A/B testing in sales outreach means sending different versions of sales copy (typically emails or LinkedIn messages) to determine which one performs better. By varying one factor at a time, such as the subject line, call to action, or opening sentence, teams can discover which messaging resonates most with prospects and apply that knowledge to improve subsequent outreach.

But here's the catch: standard A/B testing procedures fall apart at scale. Designing, deploying, and measuring numerous message variants by hand across hundreds or thousands of recipients is time-consuming, prone to human error, and often yields inconsistent or inconclusive results. That's where AI comes in.

OneShot.ai is one of the platforms that enable sales teams to create, test, and optimize messaging variants in an instant by leveraging machine learning and real-time prospect data. This article walks you through A/B testing outreach at scale with AI, from setup to optimization, with examples and frameworks that give measurable results.

What Is A/B Testing in Sales Outreach and Why Does It Matter?

Why is A/B testing critical for outbound success?

Outbound sales is never one-size-fits-all. What resonates with a CEO in the U.S. might not resonate with a marketing director in Germany. A/B testing lets SDRs and marketers validate which message structure, tone, and content prompt a recipient to act, based on the recipient’s actual behavior.

How do traditional sales teams run A/B email tests?

Most sales teams use static templates in a tool such as Outreach or Salesloft and make manual changes to them. A/B testing also happens in silos: the team batches segments and then eyeballs results over time. While workable for small campaigns, these tests fall apart when scaled to a global market or even to multiple personas.

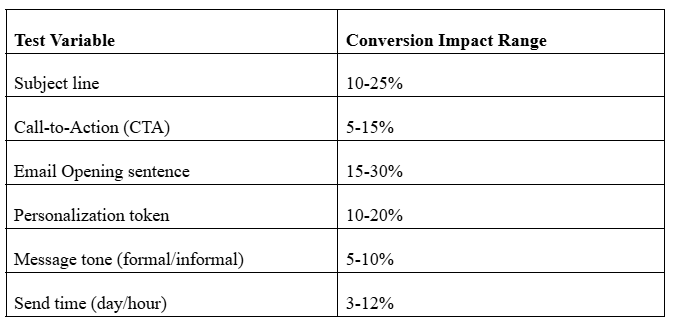

What are the most common A/B testing variables?

What Are the Challenges of A/B Testing Outreach at Scale?

What limitations do SDRs face when testing multiple variants manually?

Creating multiple variants manually is time-consuming. An SDR would have to write 5–10 message versions per persona, manage them across regions, and compare their performance. All of this takes time away from selling and from launching campaigns.

How do personalization demands clash with variant creation limits?

True personalization (such as mentioning a lead’s job change, tech stack, or pain point) doesn’t scale, especially when the rep has to place each variant into a template by hand. The SDR ends up either sending a generic message or limiting tests to the smallest of changes.

Why does data inconsistency skew A/B test insights?

When variables such as send timing, list quality, and the sending platform are not controlled, the results are unreliable. Manual execution leaves too many places for inconsistency to creep in, which makes it difficult to know whether anything really worked.

Struggling to scale personalized tests?

See how OneShot.ai’s Scaling Agent creates and deploys AI-powered variants instantly →

How Does AI Revolutionize A/B Testing for Outbound Sales?

What can AI do better than humans in A/B testing?

AI eliminates manual bottlenecks. It can:

- Generate dozens of message variants instantly.

- Adapt language/tone to individual personas.

- Track performance in real time.

- Learn from past campaigns to optimize future ones.

How do OneShot.ai’s agents generate variants using real-time prospect signals?

OneShot.ai’s Persona Agent crafts messaging variations based on role, industry, pain points, and past engagement. For example, a CFO might receive a finance-focused ROI pitch, while a product leader gets a tech-efficiency message—even if they’re in the same region.

What’s the role of machine learning in test optimization?

Machine learning examines variant performance and finds statistically significant trends—such as subject lines that work better by industry or lengths of messages that boost click-through rates.
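To make "statistically significant" concrete, here is a minimal sketch of one common check, a two-proportion z-test on reply rates. It is not a description of how OneShot.ai computes significance; the function name and the counts are hypothetical and chosen only for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: replies out of emails sent for two subject lines.
z, p = two_proportion_z_test(success_a=40, n_a=1000, success_b=65, n_b=1000)
significant = p < 0.05  # corresponds to a 95% confidence threshold
print(f"z = {z:.2f}, p = {p:.4f}, significant = {significant}")
```

The test assumes both groups were assigned at random and that each contact counts once. With very small lists or rates near zero, the normal approximation becomes unreliable.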

Can AI automate test delivery across platforms like LinkedIn, email, and calls?

Yes. OneShot.ai integrates with multichannel outreach platforms to deliver the right variant at the right time on the right platform—automatically.

How Can You Implement AI-Driven A/B Tests Step-by-Step?

What prep work is needed before launching an A/B campaign?

- Identify your test objective (open rate, click rate, replies, etc.)

- Segment your list (by persona, industry, geography)

- Select your variables (e.g., subject line, CTA, email body)

- Establish a repeatable test window (timing, platform)
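One more prep question is how many contacts each variant needs before a difference can be detected. The sketch below uses the standard normal-approximation formula for comparing two proportions. The baseline rate, expected rate, and default significance and power levels are assumptions to replace with your own numbers.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate contacts needed per variant to detect the given rate change."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical goal: detect a lift in reply rate from 4% to 6%.
n = sample_size_per_variant(baseline_rate=0.04, expected_rate=0.06)
print(f"Contacts needed per variant: {n}")
```

Smaller expected lifts need far larger lists, which is worth knowing before you decide how many variants a segment can support.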

How do you generate variant copy sets using OneShot.ai’s Persona Agent?

Input your target persona, and the AI will:

- Draw in contextual cues (role, pain points, market).

- Recommend 3–5 customized variants, each with a distinct tone and CTA.

- Automatically map variants to campaign steps.

How do you ensure consistency in test delivery?

Use OneShot.ai's Testing Logic Agent to:

- Randomly assign contacts to each variant group.

- Send at consistent times.

- Sync data across CRM and engagement tools.
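Random assignment is the step that keeps list quality from biasing one variant. As a rough illustration of the idea (not the Testing Logic Agent's actual implementation), the sketch below shuffles a contact list with a fixed seed and deals contacts out round-robin. The function name and the example addresses are hypothetical.

```python
import random


def assign_variants(contacts, variants, seed=42):
    """Shuffle contacts, then deal them round-robin so group sizes differ by at most one."""
    rng = random.Random(seed)  # a fixed seed makes the split reproducible
    shuffled = list(contacts)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    groups = {variant: [] for variant in variants}
    for index, contact in enumerate(shuffled):
        groups[variants[index % len(variants)]].append(contact)
    return groups


# Hypothetical contact list.
contacts = [f"lead{i}@example.com" for i in range(10)]
groups = assign_variants(contacts, ["A", "B", "C"])
for variant, members in groups.items():
    print(variant, len(members))
```

Shuffling before dealing means the order of the source list (for example, sorted by company size) does not leak into the groups.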

What KPIs and metrics should you track?

- Open Rate

- Click-Through Rate

- Response Rate

- Meeting Created Rate

- Variant Uplift %
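As a sketch of how these metrics relate, the example below turns raw counts into rates and computes variant uplift as the relative change over a baseline. It assumes every rate uses emails sent as the denominator. Some teams divide clicks by opens instead, so check how your tools define each rate. All counts are hypothetical.

```python
def kpi_rates(counts):
    """Convert raw event counts into rates, using emails sent as the denominator."""
    sent = counts["sent"]
    return {
        "open_rate": counts["opens"] / sent,
        "click_through_rate": counts["clicks"] / sent,
        "response_rate": counts["replies"] / sent,
        "meeting_created_rate": counts["meetings"] / sent,
    }


def uplift_pct(baseline_rate, variant_rate):
    """Relative improvement of the variant over the baseline, in percent."""
    return (variant_rate - baseline_rate) / baseline_rate * 100


# Hypothetical campaign counts, for illustration only.
baseline = {"sent": 500, "opens": 200, "clicks": 40, "replies": 20, "meetings": 5}
variant = {"sent": 500, "opens": 240, "clicks": 55, "replies": 30, "meetings": 8}

baseline_kpis = kpi_rates(baseline)
variant_kpis = kpi_rates(variant)
reply_uplift = uplift_pct(baseline_kpis["response_rate"], variant_kpis["response_rate"])
print(f"Reply-rate uplift: {reply_uplift:.1f}%")
```

Uplift is relative, not absolute: moving from a 4% to a 6% reply rate is a 2-point gain but roughly a 50% uplift.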

Sidebar: Checklist for AI-Powered A/B Testing Launch

✅ Defined target persona

✅ Outreach platform integrated

✅ 3–5 AI variants developed

✅ Success KPIs determined

✅ Variant testing logic integrated

Which AI-A/B Testing Strategies Deliver the Highest Uplift in Outreach?

What approaches compare human text vs AI-personalized messaging?

Test human-written baseline emails against AI-personalized variants. In many cases, the AI variants have shown a 15–40% uplift thanks to deeper personalization and better-matched tone.

How can you test by prospect segments?

You can segment leads by:

- Job title (e.g., CTO vs. RevOps)

- Geographic region (e.g., EMEA vs. APAC)

- Industry (e.g., healthcare vs. fintech)

Then run targeted variant tests within each segment to identify winning combinations.
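A per-segment comparison can be sketched in a few lines. The rows below are hypothetical results, and the helper simply picks the variant with the highest reply rate in each segment. It does not check statistical significance, so treat its output as a candidate winner, not a conclusion.

```python
def best_variant_by_segment(rows):
    """Return {segment: (variant, reply_rate)} for the top variant in each segment."""
    best = {}
    for segment, variant, sent, replies in rows:
        rate = replies / sent
        if segment not in best or rate > best[segment][1]:
            best[segment] = (variant, rate)
    return best


# Hypothetical results: (segment, variant, emails sent, replies).
results = [
    ("CTO", "A", 200, 10),
    ("CTO", "B", 200, 18),
    ("RevOps", "A", 200, 16),
    ("RevOps", "B", 200, 9),
]

winners = best_variant_by_segment(results)
for segment, (variant, rate) in winners.items():
    print(f"{segment}: variant {variant} ({rate:.1%} reply rate)")
```

In this made-up data the two segments prefer different variants, which is the pattern segment-level testing is meant to surface.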

What multivariate elements yield 2X+ engagement?

High-impact multivariate tests combine elements such as:

- Subject line + Tone + CTA

- Pain points specific to a persona + Use case

- Structure of the email body + Signature style
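Multivariate tests multiply quickly, because every option for one element is paired with every option for the others. The sketch below enumerates the combinations for one such test. The option lists and the per-combination contact floor are assumptions for illustration.

```python
from itertools import product

# Hypothetical options for each element under test.
subject_lines = ["Quick question", "Idea for your team"]
tones = ["formal", "casual"]
ctas = ["Book a call", "Reply with a time", "See the demo"]

# One test cell per combination of subject line, tone, and CTA.
cells = [
    {"subject": subject, "tone": tone, "cta": cta}
    for subject, tone, cta in product(subject_lines, tones, ctas)
]

min_contacts_per_cell = 200  # assumed floor; tune it to your own reply rates
contacts_needed = len(cells) * min_contacts_per_cell
print(f"{len(cells)} combinations, {contacts_needed} contacts needed")
```

Adding one more option to any element increases the number of cells, so it helps to size the list first and trim the option lists to fit.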

When should you stop or iterate on a live AI-driven test?

Stop or iterate on a test when one variant reaches statistical significance, when engagement peaks or drops off, or when the AI suggests a pivot based on pattern recognition.

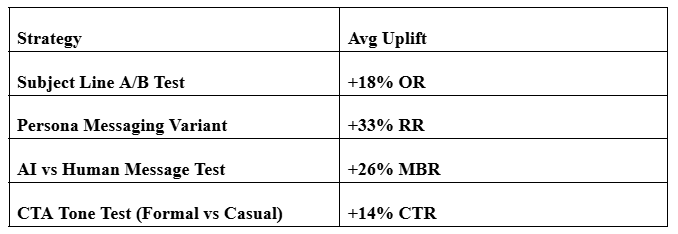

Test Strategy vs. Outcome Benchmarks

Want to deploy these strategies with zero code? Try A/B variant testing inside OneShot.ai today.

What Are Real-World Examples of AI-Led A/B Sales Outreach?

What companies have seen conversion growth?

A martech company in the U.S. improved open rates by 41% and reply rates by 72% after testing 4 AI-generated subject lines and body copy variants with OneShot.ai.

How did a B2B SaaS seller boost pipeline quality?

Instead of sending spammy outreach messages, the team used AI to personalize messaging for three personas: CTO, Product Manager, and CISO. The result was fewer meetings, but a pipeline of twice the quality and 40% higher close rates.

How Do You Analyze A/B Test Results from AI Outreach Campaigns?

What tools does OneShot.ai use to visualize test outcomes?

OneShot.ai provides dashboards comparing variant performance based on:

- Time

- Persona (type of recipient, such as role or demographic)

- Channel

- Engagement metrics

What statistical significance benchmarks matter?

Use this information to:

- Double down on high-performing variants.

- Pause or rewrite variants that are underperforming.

- Make subtle adjustments to the tone and call to action for specific segments.

Ready to optimize every message you send? Book a personalized demo with OneShot.ai’s sales experts →

How Does OneShot.ai Enable Ongoing Optimization After A/B Tests?

How often should you refresh variant copy using the Insight Agent?

To maintain message relevance and engagement, OneShot.ai’s Insight Agent should be used to refresh variant copy every 2–4 weeks, depending on audience size and market dynamics.

It pulls in real-time feedback, prospect engagement trends, and campaign performance to recommend updates. This helps avoid “template fatigue” and ensures your messaging adapts to evolving buyer behaviors.

For high-volume outbound teams, weekly refreshes may be more efficient—particularly for dynamic industries such as SaaS or fintech, where messaging staleness can hurt open and reply rates.

Can you automate version rollouts across channels from winning variants?

Yes. OneShot.ai lets users automate the promotion of best-performing variants across email, LinkedIn, and in-app chat workflows. Once a variant has reached statistical significance (e.g., 95% confidence), the Scaling Agent can scale it automatically, reducing manual effort.

Your winning subject lines, intros, or CTAs can be deployed to the next set of leads on every platform, without guesswork or copy-pasting.

How does CRM feedback loop sync with AI to guide the next test run?

OneShot.ai connects natively with CRMs such as Salesforce, HubSpot, and Pipedrive, closing the feedback loop between outcome and outreach. Win/loss metrics, meeting results, and engagement signals are fed directly into the AI, enabling the platform to:

- Optimize future messaging based on what converts.

- Tweak targeting for the next A/B tests.

- Suggest messaging pivots for underperforming personas.

This constant stream of data makes every A/B test a refinement of the previous one, pushing your outreach strategy forward.

Ready to run smarter, faster A/B tests without compromising compliance or creativity?

👉 Book your free strategy call at OneShot.ai and start optimizing outreach today.

FAQs

1. What is A/B testing in email marketing and how does AI improve it?

A/B testing in email marketing compares two or more versions of an email to see which performs better. AI enhances this process by automatically analyzing audience behavior, optimizing send times, and generating high-performing content variants in real time.

2. How does AI scale A/B testing for outreach campaigns?

AI allows marketers to test thousands of outreach variations simultaneously. Using machine learning, it quickly identifies winning messages, subject lines, and calls-to-action based on open rates and click-through data, enabling outreach at massive scale.

3. What are some best practices for A/B testing email campaigns with AI?

Best practices include setting clear goals, testing one variable at a time, using AI to segment audiences, and analyzing engagement metrics. AI tools can even predict which message variant will perform best before deployment.

4. How can AI optimize subject lines in A/B testing?

AI tools use natural language processing (NLP) to analyze tone, emotion, and engagement history. They then suggest or generate subject lines that maximize open rates, helping marketers achieve higher click-through rates (CTR).

5. What metrics should I track when running AI-driven A/B tests?

Key metrics include open rate, click-through rate, conversion rate, and unsubscribe rate. AI can automatically analyze these data points to recommend ongoing improvements for future outreach campaigns.